Schedule

| Time | ||

|---|---|---|

| 07:55 | Organizers Introductory Remarks |

|

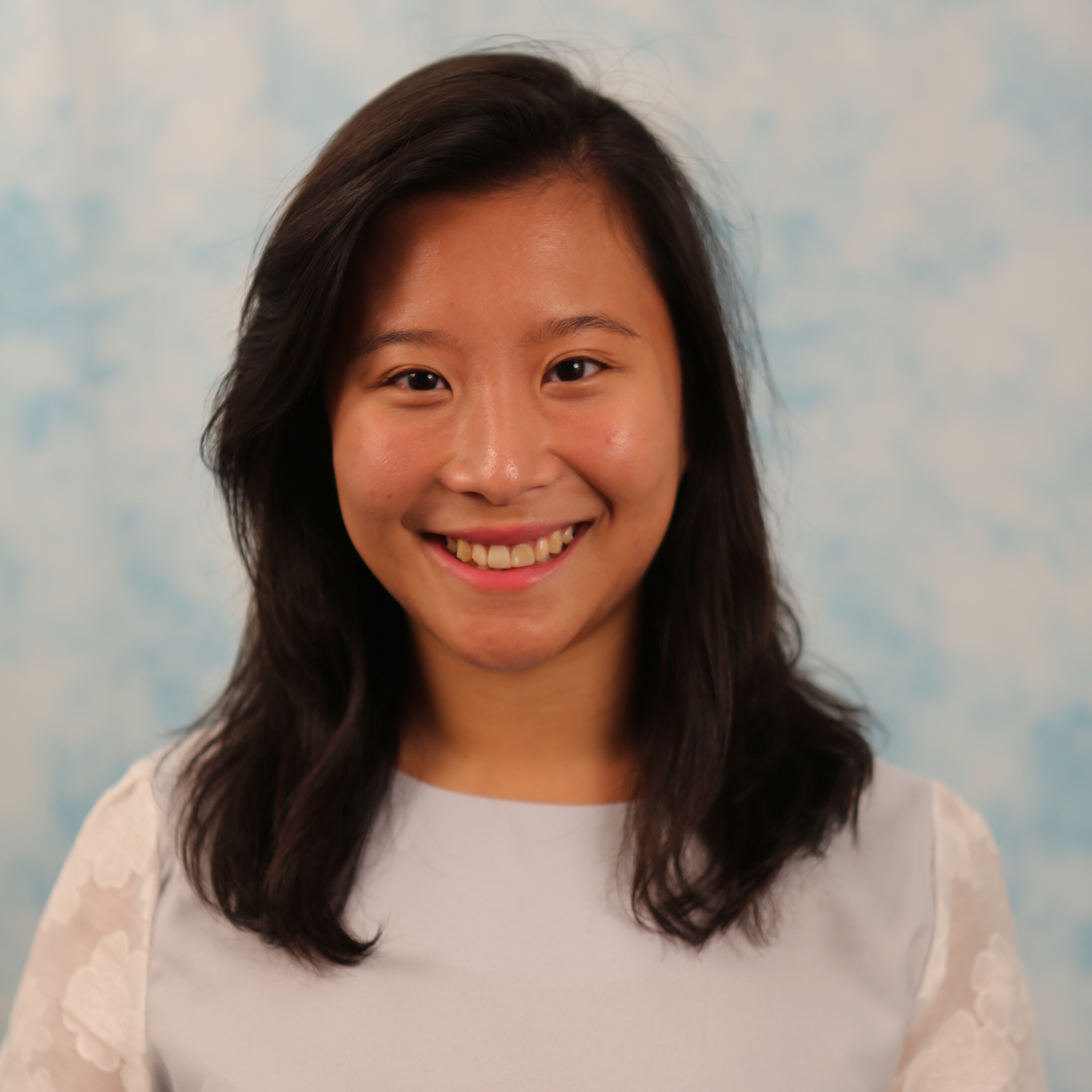

| 08:00 | Keynote 1: Jean Oh Title TBD Abstract

Abstract TBD

|

|

| 08:20 | Keynote 2: Georgia Chalvatzaki Title TBD Abstract

Abstract TBD

|

|

| 08:40 | Keynote 3: Abhishek Gupta Title TBD Abstract

Abstract TBD

|

|

| 09:00 | Oral Talks | |

| 09:20 | Coffee Break (+ Poster Session) Abstract

Abstract TBD

|

|

| 10:00 | Keynote 4: Ranjay Krishna Title TBD Abstract

Abstract TBD

|

|

| 10:20 | Keynote 5: Andreea Bobu Title TBD Abstract

Abstract TBD

|

|

| 10:40 | Keynote 6: Animesh Garg Title TBD Abstract

Abstract TBD

|

|

| 11:00 | Debate (all speakers) - What semantics *must* the data capture for efficient robot learning, and how important is direct human input in generating and curating that data? | |

| 12:00 | Organizers Closing Remarks |

Call for Papers

Submissions are handled through CMT: https://cmt3.research.microsoft.com/SEMROB2026

We accept papers prepared with the official RSS 2025 LaTeX or Word paper templates.

Our review process will be double-blind, following the RSS paper submission policy for Science/Systems papers.

All accepted papers will be invited for poster presentations; the highest-rated papers, according to the Technical Program Committee, will be given spotlight presentations. Accepted papers will be made available online on this workshop website as non-archival reports, allowing authors to also submit their work to future conferences or journals. We will announce the Best Paper Award during the closing remarks at the workshop.

Targeted Topics

In addition to the RSS subject areas, we especially invite paper submissions on various topics, including (but not limited to):

- Neural architectures leveraging demonstrations as prompts

- Goal understanding through few-shot demonstrations

- Novel abstractions, representations, and mechanisms for few-shot learning

- Retrieval-augmentation mechanisms used in learning and task-execution

- Agentic frameworks for failure reasoning, self-guidance, test-time adaptation, etc.

- LLM-based action models for robot control; large action models

- Semantic representations for generalizable policy learning

- World models used for reasoning and optimization

Submission Guidelines

RSS SemRob 2026 suggests 4+N or 8+N paper length formats, i.e., 4 or 8 pages of main content with unlimited additional pages for references, appendices, etc. However, like RSS 2025, we impose no strict page length requirements on submissions; we trust that authors will recognize that respecting reviewers' time is helpful to the evaluation of their work.

(Required acknowledgement: the Microsoft CMT service was used for managing the peer-reviewing process for this conference. This service was provided for free by Microsoft and they bore all expenses, including costs for Azure cloud services as well as for software development and support.)

Important Dates

- Submission deadline: TBD, 23:59 AOE.

- Author notification: TBD.

- Camera-ready deadline: TBD.

- Workshop: 13 July 2026, 08:00-12:00 AET.